Google Maps Scraping for Regional Performance Insights

Local Marketing

May 31, 2025

Learn how Google Maps scraping can enhance regional marketing strategies by providing valuable business insights and performance metrics.

Google Maps scraping is a powerful way to gather business data like names, addresses, reviews, and more to improve regional marketing strategies. By automating data collection, businesses can identify competitors, analyze customer sentiment, and find growth opportunities in specific areas. Here's what you need to know:

Why it matters: Regional data helps businesses benchmark performance, tailor services to local needs, and optimize ad spend.

Who benefits: Local service industries like HVAC, landscaping, and janitorial services can use this data to generate leads, track competitors, and improve outreach.

How to start: Tools like Google Maps Scraper ($4/1,000 places), Outscraper (free for 500 businesses), or Google Maps API can extract data efficiently.

Key metrics: Focus on conversion rates, cost per lead, customer lifetime value, and review response rates to measure success.

Legal considerations: Scraping must comply with Google’s terms and laws like the CFAA and CCPA to avoid legal issues.

Actionable insights: Use heat maps, adjust service areas, and monitor real-time data to refine campaigns.

Scraping Google Maps data offers actionable insights to grow your business, but it’s crucial to use ethical practices and stay compliant. Ready to dive in? Let’s explore the tools, techniques, and strategies in detail.

How to Scrape Google Maps Data

Tools and Technology You Need

If you're looking to scrape Google Maps data, the right tools can save you a lot of time and effort. Several platforms offer solutions that handle the technical heavy lifting for you, making it easier to gather the data you need.

Google Maps Scraper is a cost-effective option, charging $4.00 for every 1,000 places scraped [1]. This tool allows you to extract data from specific locations using URLs or place IDs, or even scrape entire areas by setting search and location parameters. One of its standout features is the ability to bypass Google Maps' usual limit of displaying only 120 places per area, giving you access to a broader dataset [1].

Google Maps Extractor is another option, known for its speed and efficiency. Priced at $6.00 per 1,000 results, it’s optimized for large-scale data collection, making it a solid choice for those with bigger scraping needs [6].

For those just starting out, Outscraper offers a user-friendly experience, including free access for the first 500 businesses [3]. Users have praised its reliability and ease of use. One satisfied user, Ian Mason, CTO, shared:

"Outscraper is the best scraper I've found. I cannot believe how well it works, how much data I get back, with no errors or headaches. I can make any directory site I want now."

– Ian Mason, CTO [3]

These tools can pull a wide range of business details, such as contact information, location data, opening hours, customer reviews, images, and even gas prices [1]. You can define the areas to scrape using descriptions, coordinates, geolocation parameters, or direct Google Maps URLs.

Alternatively, the Google Maps API provides a more traditional approach with three search options: Find Place, Nearby Search, and Text Search [7]. The Google Maps Datasets API allows you to create and manage datasets through REST API functionality [4]. However, specialized tools often provide access to additional data, like popular times and histograms, which aren’t available through the standard API [5].
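As a rough illustration of the Text Search option, the sketch below only builds a request URL and does not send it. It assumes the legacy Places API Text Search endpoint and its `query` and `key` parameters; check Google's current documentation before relying on either, and treat `YOUR_API_KEY` and the helper name as placeholders.

```python
from urllib.parse import urlencode

# Assumption: the legacy Places API Text Search endpoint; confirm against
# Google's current documentation before relying on it.
TEXT_SEARCH_URL = "https://maps.googleapis.com/maps/api/place/textsearch/json"


def build_text_search_url(query: str, api_key: str) -> str:
    """Return a Text Search request URL for a free-text query."""
    params = {"query": query, "key": api_key}
    return f"{TEXT_SEARCH_URL}?{urlencode(params)}"


# Placeholder key; a real request needs a key from your own Google Cloud project.
url = build_text_search_url("HVAC contractors in Austin, TX", "YOUR_API_KEY")
print(url)
```

Building the URL separately from sending it makes it easy to log and review exactly what will be requested before any quota is spent.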

Once you've chosen your tools, the next step is ensuring that the data you collect is accurate and reliable.

How to Ensure Data Accuracy

Scraped data is only as good as its accuracy. To maintain high-quality datasets, you’ll need to implement thorough validation processes [8]. Start by setting up field validation rules to ensure that the data aligns with your predefined formats and types.

Automated verification systems are invaluable for spotting errors or inconsistencies. Techniques like checksums or hashing can help verify data integrity. Combining these automated methods with manual spot-checks adds an extra layer of reliability [8].

Cross-referencing your scraped data with trusted sources can further ensure its accuracy [8]. Keep in mind that websites often change their layouts and structures, so regular monitoring and adjustments to your scraping methods are essential [9].

To maintain clean datasets, eliminate duplicates and establish error recovery protocols. Detailed logs of changes and errors will help you refine your scraping process over time.
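The validation, hashing, and de-duplication steps above can be sketched roughly as follows. The field names (`name`, `address`, `phone`) and the US phone pattern are assumptions for illustration; adapt them to whatever your scraping tool actually returns.

```python
import hashlib
import re

# Hypothetical field names; adapt them to whatever your scraper returns.
REQUIRED_FIELDS = ("name", "address", "phone")
# Assumed format: 10-digit US numbers such as (512) 555-0100 or 512-555-0100.
US_PHONE = re.compile(r"^\(?\d{3}\)?[ .-]?\d{3}[ .-]?\d{4}$")


def is_valid(record: dict) -> bool:
    """Check that required fields are present and the phone looks like a US number."""
    if any(not record.get(field) for field in REQUIRED_FIELDS):
        return False
    return bool(US_PHONE.match(record["phone"]))


def fingerprint(record: dict) -> str:
    """Hash the normalized name and address so near-identical rows collide."""
    key = f"{record['name'].strip().lower()}|{record['address'].strip().lower()}"
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


def clean(records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Return (kept, rejected): valid unique records, then invalid or duplicate ones."""
    seen, kept, rejected = set(), [], []
    for record in records:
        if not is_valid(record):
            rejected.append(record)
            continue
        digest = fingerprint(record)
        if digest in seen:
            rejected.append(record)
        else:
            seen.add(digest)
            kept.append(record)
    return kept, rejected


sample = [
    {"name": "Acme HVAC", "address": "1 Main St", "phone": "(512) 555-0100"},
    {"name": "acme hvac ", "address": "1 Main St", "phone": "512-555-0100"},
    {"name": "No Phone Co", "address": "2 Oak Ave", "phone": ""},
]
kept, rejected = clean(sample)
print(len(kept), "kept,", len(rejected), "rejected")
```

Keeping the rejected rows rather than silently dropping them gives you the error log the process depends on for later refinement.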

When conducting searches, use smaller, non-overlapping sets of search terms and include all relevant categories and synonyms. For location-based searches, provide detailed geolocation parameters, such as country, state, city, and postal code, to improve precision [1][6].
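One way to keep search sets small and non-overlapping is to generate them from explicit lists, as in this minimal sketch. The dictionary keys (`search`, `postal_code`, and so on) are hypothetical and do not correspond to any specific tool's input schema.

```python
from itertools import product


def build_queries(categories: list[str], postal_codes: list[str],
                  city: str, state: str, country: str = "US") -> list[dict]:
    """Pair every category with every postal code, skipping repeated inputs."""
    # dict.fromkeys removes duplicates while preserving the original order.
    unique_categories = list(dict.fromkeys(c.strip().lower() for c in categories))
    unique_postal_codes = list(dict.fromkeys(postal_codes))
    return [
        {"search": category, "postal_code": code,
         "city": city, "state": state, "country": country}
        for category, code in product(unique_categories, unique_postal_codes)
    ]


queries = build_queries(
    ["HVAC contractor", "air conditioning repair", "hvac contractor"],
    ["78701", "78702"],
    city="Austin", state="TX",
)
print(len(queries), "queries")
```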

Once your data meets quality standards, it’s crucial to address the legal and ethical considerations of scraping.

Legal and Ethical Guidelines

Scraping Google Maps data comes with legal and ethical responsibilities. Google's Terms of Service explicitly forbid automated scraping, and violating these terms can lead to account suspension or even legal action [12]. Following these rules not only keeps you out of trouble but also ensures your data collection practices remain transparent and responsible.

In the U.S., the Computer Fraud and Abuse Act (CFAA) is the federal law most often applied to web scraping. It prohibits unauthorized access to computer systems. However, landmark cases like hiQ Labs v. LinkedIn have clarified that accessing publicly available data doesn’t always violate the CFAA. In 2021, the U.S. Supreme Court ruled in Van Buren v. United States that accessing a system for an improper purpose doesn’t breach the CFAA if access was otherwise authorized [12].

State-level regulations, such as the California Consumer Privacy Act (CCPA), also impose requirements. For instance, businesses must address consumer requests about their personal data and provide opt-out options if scraped data is sold. Strong data governance policies are essential for managing data collection, storage, and deletion [11].

Copyright law can also come into play. Basic business facts such as names and addresses are generally not copyrightable, but user reviews, photos, and other creative content can be, so copying them without authorization could result in infringement claims. Some argue that collecting public data is protected under the First Amendment, but ethical practices are still a must.

To stay compliant, consider conducting a Data Protection Impact Assessment (DPIA) before starting any scraping project. This helps evaluate potential privacy risks [11]. Anonymize or aggregate sensitive data whenever possible, and always seek proper authorization when scraping user-generated content [10].

On the technical side, stick to best practices by respecting rate limits, avoiding excessive requests, and using IP rotation to prevent disruptions [13]. Regularly update your scraping tools to adapt to website changes and maintain compliance [10].
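Respecting rate limits can be as simple as enforcing a minimum gap between requests. The sketch below is one hedged way to do that; the class name and the injectable `clock` and `sleep` parameters are illustrative choices, and the right interval depends on the limits of the service you are calling.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval: float, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock      # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last = None        # time of the previous request, if any

    def wait(self) -> float:
        """Sleep if the last request was too recent; return the seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            delay = max(0.0, self.min_interval - (now - self._last))
        if delay:
            self._sleep(delay)
        self._last = now + delay
        return delay


# Usage: call throttle.wait() immediately before each request.
throttle = Throttle(min_interval=2.0)
```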

For the most straightforward path to compliance, seek explicit permission from Google or the data owners before beginning any scraping activities [12].

How to Analyze Regional Performance Data

Turn your collected data into meaningful insights that can shape smarter campaign strategies. By starting with reliable and compliant data-gathering practices, you can use these analytical methods to uncover valuable regional trends. Once you have quality data, focus on metrics that highlight where each region excels or falls short.

Key Metrics to Track

To understand regional performance, keep an eye on these crucial metrics: local conversion rates, cost per lead, return on ad spend (ROAS), customer lifetime value (CLV), search visibility, and review response rates. For instance, while the average ROAS for Google Ads is around 2x, well-optimized Local Services Ads can exceed 3x ROAS [15].
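Three of these metrics reduce to simple ratios. The sketch below computes them per region; the `RegionStats` fields and the sample figures are made up for illustration and would come from your own ad and CRM reports.

```python
from dataclasses import dataclass


@dataclass
class RegionStats:
    """Hypothetical per-region inputs pulled from your ad and CRM reports."""
    region: str
    leads: int
    customers: int
    ad_spend: float
    revenue: float


def summarize(stats: RegionStats) -> dict:
    """Compute conversion rate, cost per lead, and ROAS; None where undefined."""
    return {
        "region": stats.region,
        "conversion_rate": stats.customers / stats.leads if stats.leads else None,
        "cost_per_lead": stats.ad_spend / stats.leads if stats.leads else None,
        "roas": stats.revenue / stats.ad_spend if stats.ad_spend else None,
    }


# Illustrative numbers only.
report = summarize(RegionStats("Austin", leads=200, customers=30,
                               ad_spend=4000.0, revenue=12000.0))
print(report)
```

With these sample inputs the region converts 15% of leads at $20 per lead and a 3x ROAS.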

Local search visibility is especially important, as over 40% of all Google searches are tied to local intent [14]. If you’re using Google Local Services Ads, pay close attention to your response rate. Google cautions that “if you regularly fail to answer calls or respond to messages, your ad ranking may be affected” [15]. Also, don’t underestimate the power of reviews - 77% of consumers say they "always" or "regularly" read reviews when considering local businesses [15].

However, there’s a gap in tracking: only 27% of marketers are currently measuring sales revenue tied directly to location marketing efforts [14].

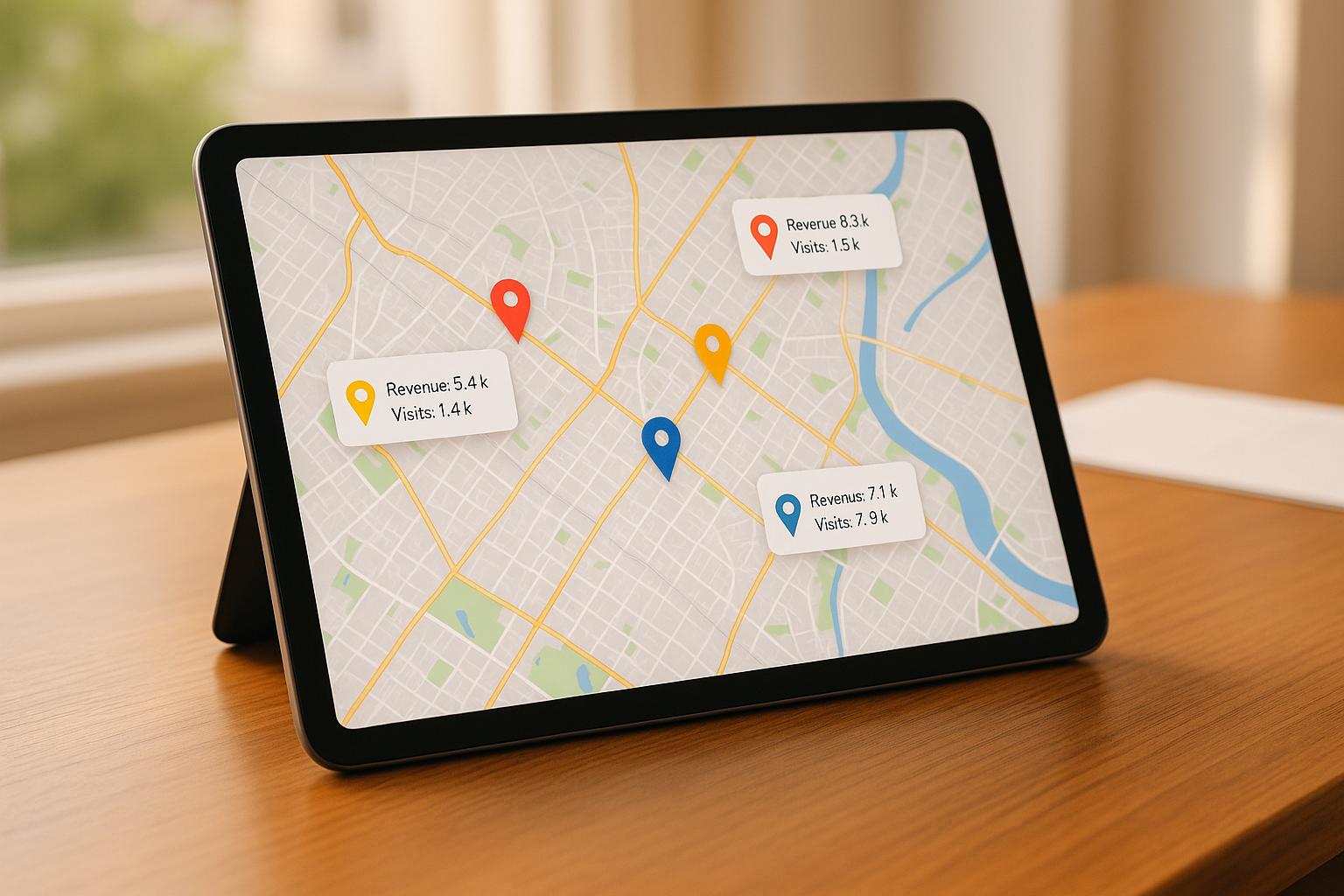

Creating Heat Maps and Visual Reports

Heat maps are a great way to visualize regional trends like conversion rates or customer value. If you’re looking for a simple solution, Google Sheets has a built-in "Geo Chart" feature. Just organize your data with regions in one column and performance metrics in another, then use the geo chart tool to create a visual representation.

For more advanced analysis, Looker Studio (formerly Google Data Studio) offers powerful mapping tools. You can create filled maps that use colors to highlight geographic boundaries or bubble maps where circle sizes represent specific metric values. These visualizations make it easier to identify trends and quickly refine your campaigns.
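To prepare data for either tool, a two-column file with regions first and the metric second is usually enough. This is a minimal sketch using only the standard library; the function name and sample values are illustrative.

```python
import csv
import io


def to_geo_chart_csv(metric_by_region: dict[str, float], metric_name: str) -> str:
    """Return two-column CSV text: region in the first column, metric in the second."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Region", metric_name])
    for region, value in sorted(metric_by_region.items()):
        writer.writerow([region, value])
    return buffer.getvalue()


# Illustrative values; save the text to a .csv file and import it into your sheet.
csv_text = to_geo_chart_csv({"Texas": 0.15, "Ohio": 0.09}, "Conversion rate")
print(csv_text)
```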

How to Use Data for Campaign Optimization

Regional performance data is only as useful as the actions it inspires. Use your insights to make targeted adjustments:

Boost ad spend in regions where conversion rates are high, costs per lead are low, and customer lifetime value exceeds expectations.

Reallocate budgets from underperforming areas to higher-performing ones.

Tailor keyword strategies to reflect local preferences, adding region-specific terms.

Schedule ads during peak times for each region to capture higher-quality leads.

Focus on areas with fewer competitors or lower average review scores to establish a stronger market presence.
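The first two adjustments above can be sketched as a proportional budget split. The scoring rule here (conversion rate divided by cost per lead) is an assumption chosen for simplicity, not a standard formula, and the region names and figures are invented.

```python
def reallocate_budget(total_budget: float, regions: dict[str, dict]) -> dict[str, float]:
    """Split the budget in proportion to conversion rate divided by cost per lead."""
    # Assumed scoring rule: higher conversion and cheaper leads earn a larger share.
    scores = {
        name: m["conversion_rate"] / m["cost_per_lead"]
        for name, m in regions.items()
        if m["cost_per_lead"] > 0
    }
    total_score = sum(scores.values())
    if total_score == 0:
        return {name: 0.0 for name in regions}
    return {name: round(total_budget * scores.get(name, 0.0) / total_score, 2)
            for name in regions}


# Illustrative inputs only.
plan = reallocate_budget(10_000, {
    "north": {"conversion_rate": 0.20, "cost_per_lead": 20.0},
    "south": {"conversion_rate": 0.10, "cost_per_lead": 40.0},
})
print(plan)
```

In practice you would likely cap how far any single region's budget can move in one cycle, since a purely proportional split can starve a region before it has enough data to be judged fairly.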

With U.S. search advertising spending projected to reach $137 billion in 2024 [16], and Local Services Ads accounting for 13.8% of all SERP clicks when displayed [17], geographic precision is more important than ever.

"Incorporating region-specific keywords within our SEO efforts has proven vital for climbing search engine rankings and maximizing our online visibility in target locations. This is the kind of actionable insight that can pivot a local marketing strategy to success." [18]

"Understanding local personas allows us to create marketing that feels personal and relevant. It's about making every customer feel seen and understood." - ProfileTree's Digital Marketing Team [18]

Combine these metrics to identify your most valuable markets. Keep in mind that regional performance can shift due to seasonal changes or economic factors. Regularly reviewing your data ensures your optimization strategies stay effective as conditions evolve.

Adding Regional Data to Campaign Management

Incorporating regional performance data into your campaign management system can significantly enhance lead generation, targeting, and monitoring efforts. Platforms that process Google Maps data automatically provide the tools needed for scoring leads, adjusting territories, and keeping campaigns on track in real time.

Automated Lead Generation and Scoring

Automated systems can transform Google Maps data into valuable lead scores by analyzing factors like competitor density, customer sentiment, and local market gaps. With over 1 billion people using Google Maps monthly to find services and businesses [2], the platform offers a massive pool of data for generating leads.

Take Cohesive AI as an example. This platform uses Google Maps data to identify leads for local service businesses such as HVAC providers, landscapers, and janitorial companies. It assigns lead scores based on regional factors - areas with fewer competitors and high search demand score higher than oversaturated markets.

Filters like location radius and business categories help refine the data [2]. After cleaning the dataset - removing duplicates and correcting errors - the platform provides actionable insights, pinpointing key competitors and identifying regions with high demand but limited supply [2].
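To make the idea of regional lead scoring concrete, here is a hedged sketch of one possible scoring function. It is not how Cohesive AI or any other platform computes scores; the inputs, weights, and 0 to 100 scale are all assumptions for illustration.

```python
def lead_score(search_demand: float, competitor_count: int,
               avg_competitor_rating: float) -> float:
    """Score a region from 0 to 100; higher means more demand and weaker competition.

    search_demand is assumed to be pre-normalized to the range 0..1.
    The 0.5 / 0.3 / 0.2 weights are arbitrary illustrative choices.
    """
    demand = max(0.0, min(1.0, search_demand))
    # Fewer competitors push this term toward 1; crowded markets push it toward 0.
    saturation = 1.0 / (1.0 + competitor_count)
    # Lower average competitor ratings (out of 5) leave a bigger gap to exploit.
    rating_gap = max(0.0, (5.0 - avg_competitor_rating) / 5.0)
    return round(100 * (0.5 * demand + 0.3 * saturation + 0.2 * rating_gap), 1)


quiet_market = lead_score(search_demand=0.8, competitor_count=3, avg_competitor_rating=4.5)
crowded_market = lead_score(search_demand=0.8, competitor_count=30, avg_competitor_rating=4.5)
print(quiet_market, crowded_market)
```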

This data-driven approach is gaining traction, with 63% of marketers increasing their budgets for analytics-based marketing [20]. Companies leveraging customer behavior insights have been shown to outperform competitors by 85% in sales growth [19]. These lead scores also guide strategic shifts in service regions, ensuring businesses focus their efforts where they matter most.

Adjusting Service Areas Based on Data

Using performance metrics to realign service areas can maximize your return on investment (ROI). Nearly 90% of marketers report higher sales through location-based marketing, with 86% noting customer growth and 84% experiencing increased engagement [23].

To optimize your service areas, analyze metrics like conversion rates, customer lifetime value, and cost per acquisition. If certain neighborhoods consistently perform better, consider expanding operations there while scaling back in less effective regions.

For instance, geo-targeted campaigns have helped businesses like downtown restaurants and local fitness studios increase foot traffic and memberships by 15–25% [21]. Similarly, excluding regions where your services aren’t offered can prevent wasteful ad spending [22]. Bid adjustments allow you to allocate more resources to high-performing areas while reducing investment in underperforming ones [22].

Real-Time Monitoring and Reporting

Integrating real-time data into your campaign management system ensures that adjustments remain accurate and timely. Real-time monitoring enables businesses to adapt quickly to market changes, boosting productivity by 63% and improving campaign effectiveness by 40% [24].

Modern dashboards simplify this process by presenting key metrics - such as lead quality, response rates, and conversion patterns - through visual reports like charts and graphs [24]. This makes it easy to identify regional trends and make immediate adjustments.

"When we shifted from gut-feel to data-backed strategies in our marketing, we saw a 40 percent increase in campaign effectiveness. The key is combining data insights with industry expertise for truly impactful decisions."

– Mary Zhang, Head of Marketing and Finance, Dgtl Infra [24]

AI-powered systems take this a step further by dynamically allocating budgets to optimize lead quality, ad placements, and campaign performance based on real-time data [26]. As market conditions shift, these systems ensure campaigns remain efficient and compliant.

Real-time monitoring has become a necessity for meeting customer expectations. Mindy Ferguson, Vice President, Messaging and Streaming at Amazon Web Services, highlights this shift:

"Anything outside of using real-time data becomes very frustrating for the end consumer and feels unnatural now. Having real-time data always available is becoming an expectation for customers. It's the world we're living in." [25]

To stay ahead, set up automated alerts for significant changes in regional performance, such as sudden drops in conversion rates or spikes in competitor activity. This allows you to respond quickly to market shifts and maintain a competitive edge across all your service areas.
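A conversion-rate alert of this kind can be sketched as a comparison against a baseline. The 25% relative-drop threshold and the region names below are assumptions; choose a threshold that matches the normal variation in your own data.

```python
def conversion_alerts(baseline: dict[str, float], current: dict[str, float],
                      max_drop: float = 0.25) -> list[str]:
    """Return messages for regions whose conversion rate fell by more than max_drop."""
    alerts = []
    for region, base_rate in baseline.items():
        rate = current.get(region)
        if rate is None or base_rate <= 0:
            continue  # no current data or no meaningful baseline to compare against
        drop = (base_rate - rate) / base_rate  # relative drop, e.g. 0.4 means 40%
        if drop > max_drop:
            alerts.append(f"{region}: conversion rate down {drop:.0%}")
    return alerts


# Illustrative numbers only.
alerts = conversion_alerts({"north": 0.20, "south": 0.10},
                           {"north": 0.12, "south": 0.095})
print(alerts)
```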

Keeping Regional Data Current

Keeping regional data accurate and up-to-date is critical for running effective campaigns. When your data is current, it becomes a powerful tool for refining lead generation and managing campaigns across different areas. Since Google Maps data is updated frequently, relying on outdated information can lead to poor decisions that may hurt performance.

How Often to Update Your Data

Google updates business listings regularly and often without prior notice [27]. These updates tend to spike during certain times of the year, like November, December, and the weeks leading up to Easter, affecting details such as opening hours [27]. In fact, nearly 44% of Google Business Profile listings have experienced at least one update in the past three years [27]. To stay ahead, it’s essential to update your regional data routinely. This ensures that your campaign strategies are based on reliable, current information.

Google’s systems validate changes by cross-referencing multiple sources, including business websites, external platforms, public user contributions, and engagement data [27]. Regular updates not only improve the accuracy of your data but also help you track market trends as they evolve.
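A simple way to keep updates routine is to flag records that have not been refreshed recently. In this sketch the `last_scraped` field and the 30-day default are assumptions; the right interval depends on how quickly listings change in your market.

```python
from datetime import date, timedelta


def stale_records(records: list[dict], today: date, max_age_days: int = 30) -> list[dict]:
    """Return records whose last_scraped date is older than max_age_days."""
    cutoff = today - timedelta(days=max_age_days)
    return [r for r in records if r["last_scraped"] < cutoff]


# Hypothetical listings with the date each was last collected.
listings = [
    {"name": "Acme HVAC", "last_scraped": date(2025, 5, 20)},
    {"name": "Green Lawn Co", "last_scraped": date(2025, 3, 1)},
]
to_refresh = stale_records(listings, today=date(2025, 5, 31))
print([r["name"] for r in to_refresh])
```

Shortening `max_age_days` ahead of holiday periods is one way to account for the seasonal spikes in listing changes.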

Tracking Market Changes

Keeping an eye on key metrics across regions can reveal important market shifts. Metrics like cost per lead, conversion rates, and lead quality are especially useful for understanding how changing conditions affect your campaigns [28]. Regional sales data can further highlight areas of growth or decline, enabling businesses to adjust strategies accordingly [29]. Seasonal trends also play a significant role - think of higher HVAC demand during extreme weather months or increased landscaping needs in spring and fall [28].

Cohesive AI offers tools to monitor these market changes effectively. By analyzing Google Maps data, it tracks factors like business locations, population density, competitor presence, and customer sentiment [2]. This approach helps pinpoint underserved areas, identify customer dissatisfaction, and uncover opportunities for partnerships. Additionally, analyzing operational patterns across industries and regions offers insights that can shape targeted marketing campaigns tailored to local trends [29].

Using Historical Data for Planning

While current data is essential, historical data provides a broader perspective for long-term planning and risk management. It helps identify which strategies have worked in the past and which haven’t, offering a roadmap for future decision-making [32]. Historical insights also aid in spotting economic cycles, seasonal patterns, and recurring trends, making forecasts more accurate [33]. Tracking performance indicators over time allows businesses to evaluate their overall progress comprehensively [32].

A great example of leveraging historical data comes from February 2025, when Blue BI developed a forecasting system for a pharmaceutical client. They used historical sales data to train forecasting models, selecting the best one and integrating additional inputs like past advertising campaigns. The system continuously updates forecasts as new data becomes available, ensuring accuracy [32].

Historical data can also enhance customer experiences by enabling personalized campaigns that build loyalty. It can even spark innovation by revealing areas where processes could be streamlined or new services introduced [32]. Organizations that embrace data-driven strategies are three times more likely to report major improvements in decision-making [34].

Historical trend analysis generally identifies three types of patterns: short-term trends lasting a few days to months, long-term trends spanning years or decades, and cyclical trends influenced by factors like economic shifts [31]. To make the most of historical data, conduct regular market research to uncover these patterns. Recurring surveys - whether monthly, quarterly, or annually - can help track trends over time, ensuring your regional insights remain actionable and relevant for future campaigns [30].
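One common way to separate short-term movement from longer patterns is a trailing moving average. The sketch below is a minimal version with made-up monthly lead counts; a wider window smooths out more short-term noise.

```python
def moving_average(values: list[float], window: int) -> list[float]:
    """Return the trailing average over each full window of values."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [sum(values[i - window:i]) / window
            for i in range(window, len(values) + 1)]


monthly_leads = [10, 12, 14, 20, 22, 24]  # made-up numbers for illustration
short_term = moving_average(monthly_leads, 3)
print(short_term)
```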

Conclusion

Google Maps scraping has become a game-changer for local service businesses looking to stay ahead in competitive markets. With more than 1 billion users relying on Google Maps every month to find services and 81% of Americans using it to gather information about local businesses, the data extracted from this platform offers immense potential for growth [2][38].

When businesses turn raw data into actionable strategies, the possibilities are endless. Google Maps scraping does more than just collect contact information - it provides detailed insights that reveal new market opportunities. This data allows businesses to craft highly targeted marketing campaigns, refine their local SEO strategies using extracted keywords, and even analyze customer sentiment to stay in tune with their audience [35][36].

"Google Maps is a major source of commercial leads, making reliable data crucial for success."

– Al Jovayer Khandakar, Content Writer [37]

In fact, 77.4% of marketers express confidence in data-driven strategies and their long-term potential [41]. For industries like janitorial services, landscaping, HVAC, and catering, this approach means sharper market segmentation, more precise customer targeting, and a stronger competitive edge [40].

Cohesive AI takes this a step further by automating the entire lead generation process. Using Google Maps data, the platform identifies leads, finds business owner emails, generates personalized cold emails with AI, and manages automated outreach campaigns - all for a flat fee of $150 per month, with no long-term commitments. And with a promise of at least two interested responses per month, it offers an efficient alternative to traditional lead generation agencies while giving businesses more control over their regional data [39].

But the benefits go beyond immediate lead generation. With these insights, businesses can evaluate competitors, navigate regulatory challenges, and make informed decisions about entering new markets or expanding in existing ones. Access to real-time, regional data is no longer a luxury - it’s a necessity for businesses aiming to adapt and thrive in today’s fast-changing local service markets [40].

For those ready to embrace the potential of Google Maps scraping, the key lies in combining the right tools, ethical practices, and consistent data management. This approach not only supports sustained growth but also ensures businesses can run smarter, more targeted campaigns, allocate resources effectively, and secure a stronger foothold in increasingly competitive environments.

FAQs

What legal and ethical factors should I consider when scraping data from Google Maps?

When gathering data from Google Maps, it's essential to navigate both legal and ethical considerations carefully. Legally, while accessing publicly available data might seem acceptable, scraping often goes against Google’s Terms of Service (ToS), which explicitly forbid such activities. Violating these terms can result in serious consequences, like being banned from the platform or even facing legal challenges.

On the ethical side, responsible practices should always come first. Avoid putting undue strain on Google’s servers, and ensure any data collected is handled respectfully and used appropriately. Ideally, you should obtain explicit consent from data owners. At the very least, businesses must align their actions with legal standards and ethical principles to uphold trust and remain compliant.

How can businesses collect accurate and reliable data through Google Maps scraping?

To gather precise and dependable data through Google Maps scraping, businesses should keep a few essential practices in mind. First, choose reliable scraping tools, and review how their use fits with Google’s Terms of Service, which restrict automated scraping. Many of these tools come equipped with features like rotating proxies, which help avoid IP bans and ensure smooth data collection.

Another crucial step is to keep your scraping tools or scripts updated regularly. Google Maps' structure can change over time, and staying up-to-date ensures your data extraction process remains effective. Additionally, verifying the scraped data against trustworthy sources - such as official business websites or verified databases - can help maintain accuracy and consistency.

By following these practices, businesses can enhance the reliability of their data, paving the way for more informed analysis and better decision-making in local service campaigns.

What key metrics should I focus on when using Google Maps scraping to analyze regional performance?

When leveraging Google Maps scraping to evaluate regional performance, it's essential to pay attention to business ratings, customer reviews, and location visibility. These factors offer a deeper understanding of customer satisfaction, competitive standing, and how easily potential customers can locate businesses online.

You should also monitor details like the number of businesses, contact information, and operating hours within a specific area. This information helps gauge market saturation and pinpoint potential growth opportunities. By analyzing these insights, businesses can fine-tune their local marketing strategies and enhance their efforts in attracting new leads.