Automated Data Validation for Government Filings

Jan 15, 2026

AI-driven automated data validation catches filing errors, enforces compliance, and slashes processing time and costs for government filings.

Automated data validation helps businesses avoid costly errors in government filings by using AI to ensure accuracy, compliance, and efficiency. Manual processes often lead to mistakes - like formatting issues, outdated information, or duplicates - that delay permits, licenses, and compliance approvals. Automation solves these problems by providing real-time error detection, AI-powered anomaly checks, and scalable solutions for high-volume filings.

Key takeaways:

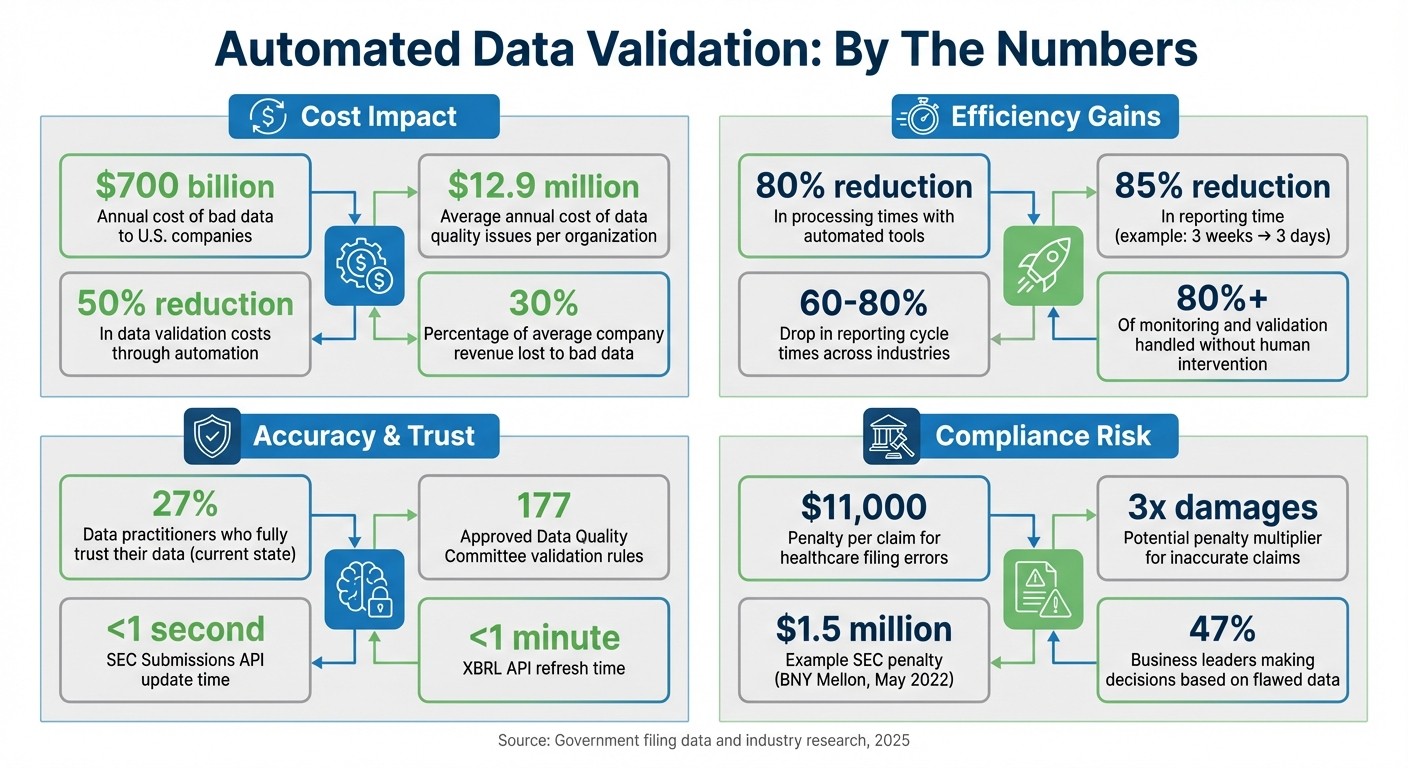

Cost Savings: Bad data costs U.S. companies $700 billion annually; automation reduces validation costs by up to 50%.

Efficiency: Automated tools cut processing times by up to 80%, ensuring faster approvals.

Improved Accuracy: AI catches errors like scaling mistakes, outdated taxonomy, and cross-field inconsistencies.

Compliance Assurance: Prevents penalties by ensuring filings meet strict regulatory standards.

For small businesses like HVAC contractors or landscapers, automation simplifies filings, reduces administrative burdens, and supports compliance without the need for large teams. This technology turns a time-consuming process into a streamlined, far less error-prone one, enabling businesses to focus on serving customers.

Key Benefits of Automated Data Validation for Government Filings

Common Problems in Processing Government Filings

Frequent Errors in Government Filings

Mistakes in government filings are often caused by avoidable errors. For instance, basic mathematical errors - like when assets fail to balance with liabilities and equity - are a common issue[6]. Formatting errors are another frequent culprit. Something as simple as entering "Amex" instead of the required "AMEX" for the American Stock Exchange can result in automatic rejection[2].

Scaling mistakes also create major headaches. A common example is when filers report common stock outstanding at values 100 times larger than intended due to incorrect unit reporting[6]. Date mismatches occur when context dates extend beyond the reporting period, and outdated taxonomy elements trigger data quality flags. Another recurring problem is the creation of custom extensions for state or country identifiers instead of sticking to standard taxonomy members, which causes inconsistencies across the system[6][4]. These errors often snowball during manual checks, further delaying the process.

Problems with Manual Validation

Manual validation adds another layer of complexity. It involves multiple rounds of data checks across different agencies, which can drag out resolution times. In some cases, it may take months to clean up errors and resubmit filings[8]. Caseworkers frequently face inefficiencies, such as logging into multiple portals to verify wage data, residency, or tax records, then manually transferring information between systems. This not only slows things down but also increases security risks[9].

"Avoidable errors cause lengthy delays delivering critical benefits while people scour for errors", SimpliGov reports[3].

As filing volumes increase, the costs of manual processing grow alongside them. More staff hours are required, making it an expensive solution for scaling operations[10]. Trust in the data is also a major concern - only 27% of data practitioners fully trust the information they work with[7]. Frequent changes to eligibility rules, such as differences between SNAP asset tests and Medicaid income thresholds, further complicate manual interpretation, often leading to inconsistent decisions for applicants[9].

Risks of Non-Compliance and Incorrect Data

The consequences of filing errors extend beyond operational inefficiencies. They can expose organizations to severe financial and reputational risks. For example, healthcare providers submitting inaccurate Medicare or Medicaid claims risk penalties of up to three times the actual damages, plus $11,000 per claim[13]. In May 2022, BNY Mellon Investment Adviser faced a $1.5 million SEC penalty for making misleading claims[14].

Inaccurate data doesn’t just hurt compliance - it can also lead to poor business decisions. Overstated revenue might result in over-hiring or misallocated resources[12]. Alarmingly, 47% of business leaders admit to making significant decisions based on flawed data or AI-generated errors[11]. Misleading claims about technology carry legal risk too. In March 2025, the Southern District of New York denied a motion to dismiss a class action lawsuit against DocGo Inc. The suit alleged that the company had misled investors about its "proprietary AI system", and its CEO’s misrepresentation of his educational background in computational learning played a key role in the case[14].

"The cost of getting it wrong isn't just embarrassment; it's measurable business impact", says Amani Undru, BI & Data Strategist at ThoughtSpot[11].

How Automated Data Validation Fixes Filing Problems

Real-Time Error Detection and Feedback

Automated systems are designed to catch filing errors as they happen. By validating submissions against strict formatting rules, these systems ensure mistakes are flagged immediately. For example, the SEC's EDGAR system processes Interactive Data (XBRL) filings through a Public Test Suite, categorizing issues as either "warnings" or "errors." Errors lead to an instant rejection of the filing, saving time and avoiding further complications[2][5].
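To make the warning/error split concrete, here is a minimal sketch of how a validator might turn a list of issues into a filing outcome. The class names, rule codes, and the "accepted_with_warnings" status are illustrative assumptions, not EDGAR's actual data model; the only behavior taken from the text is that any error means rejection.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    WARNING = "warning"
    ERROR = "error"


@dataclass(frozen=True)
class Issue:
    code: str        # hypothetical rule identifier
    message: str
    severity: Severity


def filing_outcome(issues: list[Issue]) -> str:
    """Reject when any issue is an error; warnings alone do not block here."""
    if any(issue.severity is Severity.ERROR for issue in issues):
        return "rejected"
    if issues:
        return "accepted_with_warnings"  # assumed status name
    return "accepted"


issues = [
    Issue("EX-001", "Exchange code 'Amex' is not an allowed value", Severity.ERROR),
    Issue("EX-002", "Element label is unusually long", Severity.WARNING),
]
print(filing_outcome(issues))  # rejected
```

Keeping severity as data rather than as separate code paths lets the same issue list drive both the accept/reject decision and the feedback shown to the filer.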

The SEC's Submissions API updates with a typical delay of less than a second, while its XBRL APIs refresh in under a minute[4]. This rapid turnaround provides filers with detailed feedback and specific guidance to fix issues before finalizing their submissions[6].

Validation is supported by 177 approved Data Quality Committee (DQC) rules, which help ensure accuracy. For instance, Rule DQC_0004 checks that Assets equal the sum of Liabilities and Shareholders' Equity, while DQC_0018 flags outdated taxonomy elements[6]. These rules provide immediate, actionable instructions for corrections, creating a solid groundwork for further AI-driven analysis.
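The balance check described above can be sketched in a few lines. This is an illustration of the idea only, not the DQC's own implementation: the dictionary keys and the wording of the message are assumptions made for the example.

```python
from decimal import Decimal


def balance_sheet_balances(assets: Decimal, liabilities: Decimal,
                           equity: Decimal,
                           tolerance: Decimal = Decimal("0")) -> bool:
    """True when Assets equals Liabilities + Equity within the tolerance."""
    return abs(assets - (liabilities + equity)) <= tolerance


def check_filing(facts: dict[str, Decimal]) -> list[str]:
    """Return actionable messages; an empty list means the check passed."""
    messages = []
    assets = facts["Assets"]                      # hypothetical key names
    liabilities = facts["Liabilities"]
    equity = facts["StockholdersEquity"]
    if not balance_sheet_balances(assets, liabilities, equity):
        gap = assets - (liabilities + equity)
        messages.append(
            f"Assets differ from Liabilities + Equity by {gap}. "
            "Check the reported values and units."
        )
    return messages


facts = {
    "Assets": Decimal("500000"),
    "Liabilities": Decimal("320000"),
    "StockholdersEquity": Decimal("175000"),
}
for message in check_filing(facts):
    print(message)
```

Using `Decimal` instead of floats avoids rounding noise, so an exact-match tolerance of zero is safe for currency amounts.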

AI-Powered Anomaly Detection

AI and machine learning take error detection to the next level by spotting patterns and inconsistencies that might escape human reviewers. These tools perform checks to ensure that fundamental accounting equations hold true across filings[6]. For instance, they can catch "sign flip" errors, flagging negative values in data elements that should always be positive according to US GAAP or IFRS taxonomies[6].

Rule DQC_0091 focuses on outliers, flagging any percentage-type element with a value greater than 10. Because percentages are reported as decimals (0.25 for 25%), a value above 10 would mean more than 1,000%, which usually points to a scaling error[6]. Additionally, the system verifies aggregation for durational data, ensuring that monthly or quarterly sub-periods add up correctly to the total value reported for the full period[6].
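The three checks in this section (sign flips, percentage outliers, and sub-period aggregation) are rule-based at heart, and a rough sketch shows how little code each one needs. The element names and sets below are hypothetical; a real rule set would derive them from the taxonomy. The threshold of 10 comes from the text, and the example assumes percentages are stored as decimal fractions.

```python
from decimal import Decimal

# Hypothetical element lists, for illustration only.
NON_NEGATIVE_ELEMENTS = {"Assets", "CommonStockSharesOutstanding"}
PERCENT_ELEMENTS = {"EffectiveIncomeTaxRate"}
PERCENT_LIMIT = Decimal("10")  # threshold named in the text for DQC_0091


def sign_flip_errors(facts: dict[str, Decimal]) -> list[str]:
    """Elements that must not be negative but were reported below zero."""
    return [name for name, value in facts.items()
            if name in NON_NEGATIVE_ELEMENTS and value < 0]


def percent_outliers(facts: dict[str, Decimal]) -> list[str]:
    """Percentage elements above the limit (likely 21 entered for 0.21)."""
    return [name for name, value in facts.items()
            if name in PERCENT_ELEMENTS and value > PERCENT_LIMIT]


def sub_periods_match_total(sub_periods: list[Decimal], total: Decimal,
                            tolerance: Decimal = Decimal("0")) -> bool:
    """True when quarterly or monthly values add up to the full-period total."""
    return abs(sum(sub_periods, Decimal("0")) - total) <= tolerance


facts = {
    "Assets": Decimal("-1200"),
    "EffectiveIncomeTaxRate": Decimal("21"),
    "CommonStockSharesOutstanding": Decimal("1000"),
}
quarters = [Decimal("250"), Decimal("260"), Decimal("240"), Decimal("255")]

print(sign_flip_errors(facts))                            # ['Assets']
print(percent_outliers(facts))                            # ['EffectiveIncomeTaxRate']
print(sub_periods_match_total(quarters, Decimal("1005")))  # True
```

Statistical or machine-learning detectors would sit on top of checks like these, catching patterns that fixed thresholds cannot express.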

"Automated validation by preparation software can make it more efficient for filers to verify compliance before sending their filings to EDGAR itself", according to the SEC[5].

AI tools also excel at identifying complex errors, such as "Anomalous Microsegments", where a small upstream issue impacts an entire group of data rather than just a single record[1]. This capability highlights how automation can handle intricate, interconnected problems that manual checks often miss. As filing volumes grow, these advanced detection tools demonstrate their ability to scale effortlessly.

Handling High-Volume Filings at Scale

The benefits of automation become especially clear when dealing with large volumes of data. While manually validating a single record can take several minutes, automated systems can process thousands of datasets in mere seconds[1]. This speed is a game-changer for small businesses that may lack the resources for large compliance teams.

Automated data quality management solutions can oversee more than 80% of the monitoring and validation process without requiring human intervention[1]. Unlike random spot-checks, which risk missing errors, automation ensures comprehensive coverage of all data[1]. Additionally, these systems can halt subsequent ETL processes to prevent errors from propagating into downstream repositories[1].
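Halting the pipeline before bad data reaches downstream systems can be as simple as a gate in front of the load step. The sketch below assumes a hypothetical record shape (`filer_id`, `amount`) and a caller-supplied `load` function; it illustrates the pattern rather than any particular product's behavior.

```python
class ValidationHalt(Exception):
    """Raised to stop the pipeline before the load step runs."""


def validate(records: list[dict]) -> list[tuple[int, str]]:
    """Check every record (not a sample) and return (index, problem) pairs."""
    problems = []
    for i, rec in enumerate(records):
        if not rec.get("filer_id"):
            problems.append((i, "missing filer_id"))
        amount = rec.get("amount")
        if amount is None or amount < 0:
            problems.append((i, "amount missing or negative"))
    return problems


def run_pipeline(records: list[dict], load) -> int:
    """Load the batch only when validation finds nothing."""
    problems = validate(records)
    if problems:
        raise ValidationHalt(f"{len(problems)} problem(s) found, load skipped: {problems}")
    load(records)
    return len(records)


warehouse: list[dict] = []
run_pipeline([{"filer_id": "A1", "amount": 10}], warehouse.extend)

try:
    run_pipeline([{"filer_id": "", "amount": 5}], warehouse.extend)
except ValidationHalt as halt:
    print(halt)

print(len(warehouse))  # 1
```

Raising an exception, rather than logging and continuing, is the design choice that keeps a single bad batch from propagating into every downstream report.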

For small businesses, this approach means they can expand their filing operations without significantly increasing costs or error rates - making compliance more accessible and efficient.

Main Techniques in Automated Data Validation

Syntax and Format Validation

Automated systems ensure that every data field adheres to its required format before processing. For instance, state codes must be exactly two characters, Social Security numbers should consist only of digits, and dates need to follow the YYYY-MM-DD format[1][16]. The General Services Administration's Federal Audit Clearinghouse leverages JSON Schemas to validate complex identifiers like the 12-character Unique Entity Identifier (UEI). These schemas enforce strict rules, including regex patterns and format requirements for fields like currency, zip codes, and other structured data[15].
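As a rough illustration of these format rules, the sketch below uses Python's standard `re` module instead of JSON Schema. The field names are hypothetical, and the UEI pattern is deliberately simplified to "12 uppercase letters or digits"; the Federal Audit Clearinghouse's actual schema enforces stricter rules.

```python
import re
from datetime import date

STATE_CODE = re.compile(r"[A-Z]{2}")
SSN_DIGITS = re.compile(r"\d{9}")
# Simplified assumption: 12 uppercase alphanumerics. Real UEI rules are stricter.
UEI = re.compile(r"[A-Z0-9]{12}")
ISO_DATE_SHAPE = re.compile(r"\d{4}-\d{2}-\d{2}")


def is_iso_date(text: str) -> bool:
    """True for a real calendar date written as YYYY-MM-DD."""
    if not ISO_DATE_SHAPE.fullmatch(text):
        return False
    try:
        date.fromisoformat(text)
    except ValueError:
        return False  # right shape, impossible date (e.g. February 30)
    return True


def format_errors(record: dict[str, str]) -> list[str]:
    """Return the names of fields that fail their format check."""
    checks = {
        "state": lambda v: STATE_CODE.fullmatch(v) is not None,
        "ssn": lambda v: SSN_DIGITS.fullmatch(v) is not None,
        "uei": lambda v: UEI.fullmatch(v) is not None,
        "filed_on": is_iso_date,
    }
    return [field for field, ok in checks.items() if not ok(record.get(field, ""))]


record = {
    "state": "Tx",
    "ssn": "123456789",
    "uei": "ABC123DEF456",
    "filed_on": "2026-02-30",
}
print(format_errors(record))  # ['state', 'filed_on']
```

The date check runs in two steps on purpose: the pattern enforces the exact YYYY-MM-DD shape, and the calendar parse rejects dates that look right but do not exist.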

These format checks act as a safeguard, preventing invalid data from entering government systems. For example, the SEC's EDGAR system demands precise abbreviations for Self-Regulatory Organizations (e.g., AMEX, NYSE, or FINRA) and specific codes for document types. Submissions that fail to meet these criteria are immediately rejected, accompanied by clear correction instructions. By catching errors at this early stage, these checks ensure that raw data is accurate and ready for more advanced validation.

Duplicate and Completeness Checks

Once data passes format validation, automated systems perform thorough checks for duplicates and completeness to maintain accuracy. Tools use "Unique checks" to identify duplicate records and "Null checks" to confirm that all required fields are filled[1]. For example, the Federal Audit Clearinghouse applies patterns to Unique Entity Identifiers to prevent duplicate filings for the same period[1].

These systems also detect orphaned data - entries that lack necessary parent-child relationships[1]. Completeness checks ensure that all required fields in forms like Federal Awards sheets are populated before submission, reducing the risk of costly errors down the line[1].
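Unique, null, and orphan checks are straightforward to express over plain records. The following sketch uses hypothetical field names (`uei`, `period`, `total`, `award_id`) and in-memory lists; production tools typically run the same logic as database queries.

```python
from collections import Counter


def duplicate_keys(rows: list[dict], key_fields: list[str]) -> list[tuple]:
    """Unique check: key combinations that appear more than once."""
    counts = Counter(tuple(row.get(f) for f in key_fields) for row in rows)
    return [key for key, n in counts.items() if n > 1]


def missing_required(rows: list[dict], required: list[str]) -> list[tuple[int, str]]:
    """Null check: (row index, field) for every empty required field."""
    return [(i, f) for i, row in enumerate(rows)
            for f in required if row.get(f) in (None, "")]


def orphans(children: list[dict], parents: list[dict],
            parent_field: str, parent_key: str) -> list[dict]:
    """Child rows whose parent reference matches no parent row."""
    known = {p[parent_key] for p in parents}
    return [c for c in children if c.get(parent_field) not in known]


filings = [
    {"uei": "ABC123DEF456", "period": "2025", "total": 1000},
    {"uei": "ABC123DEF456", "period": "2025", "total": 1000},
    {"uei": "XYZ789GHI012", "period": "2025", "total": None},
]
awards = [
    {"award_id": 1, "uei": "ABC123DEF456"},
    {"award_id": 2, "uei": "NOPARENT0000"},
]

print(duplicate_keys(filings, ["uei", "period"]))            # [('ABC123DEF456', '2025')]
print(missing_required(filings, ["uei", "period", "total"]))  # [(2, 'total')]
print(orphans(awards, filings, "uei", "uei"))                 # [{'award_id': 2, 'uei': 'NOPARENT0000'}]
```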

Cross-Field Consistency Validation

After validating individual fields, cross-field consistency checks confirm that data relationships align with logical and real-world expectations. These validations ensure the integrity of filings by verifying that related data points make sense together. The XBRL US Data Quality Committee, for instance, has approved 177 validation rules specifically designed for SEC filers[6].

"A user should never see an error from a JSON Schema. If that happens, we add a new intake validation with a user-centered, helpful error message." – GSA Federal Audit Clearinghouse Team[15]

Examples of cross-field validation include verifying that assets equal liabilities plus shareholders' equity, ensuring context dates do not exceed the reporting period's end date, and confirming that individual components add up to the reported total[6]. For W-2 forms, these checks might confirm that "Medicare Wages" (Box 5) is at least as large as "Wages" (Box 1), which is the usual pattern because pre-tax retirement deferrals reduce Box 1 but not Box 5[16]. These validations catch errors that single-field checks might overlook, preventing flawed data from propagating through ETL processes and corrupting downstream systems[1].
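Two of these cross-field checks, the context-date rule and the W-2 comparison, might look like the sketch below. The function and parameter names are assumptions for illustration. The W-2 comparison is written as "Box 5 not less than Box 1" because the two boxes can legitimately be equal, and a failure is best treated as a prompt for review rather than a hard rejection.

```python
from datetime import date
from decimal import Decimal


def context_within_period(context_end: date, period_end: date) -> bool:
    """True when the context date does not run past the reporting period."""
    return context_end <= period_end


def w2_wages_plausible(box1_wages: Decimal, box5_medicare_wages: Decimal) -> bool:
    """True when Medicare wages (Box 5) are not below wages (Box 1)."""
    return box5_medicare_wages >= box1_wages


def cross_field_messages(filing: dict) -> list[str]:
    """Run both checks and return human-readable findings."""
    messages = []
    if not context_within_period(filing["context_end"], filing["period_end"]):
        messages.append("Context date falls after the end of the reporting period.")
    if not w2_wages_plausible(filing["box1"], filing["box5"]):
        messages.append("Box 5 is lower than Box 1; review before submitting.")
    return messages


filing = {
    "context_end": date(2026, 1, 15),
    "period_end": date(2025, 12, 31),
    "box1": Decimal("52000.00"),
    "box5": Decimal("48000.00"),
}
for message in cross_field_messages(filing):
    print(message)
```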

Benefits of Automated Data Validation

Better Efficiency and Accuracy

Automated data validation dramatically cuts down the time needed to prepare government filings. For example, a mid-sized financial institution in April 2025 managed to reduce its quarterly reporting time from nearly three weeks to just three days after adopting automated validation - an 85% reduction[19]. Across industries, organizations have reported a drop in reporting cycle times by 60% to 80% after switching from manual to automated processes[19].

This approach enables real-time verification of filings, catching errors at the point of entry. By detecting mistakes immediately, it prevents them from cascading through the entire data pipeline[17][18]. This not only improves operational efficiency but also helps lower costs by minimizing the need for costly corrections down the line.

Lower Costs for Small Businesses

Manual data validation can drain resources, especially for small businesses. Data quality issues cost organizations an average of $12.9 million annually[17], and bad data costs U.S. companies an estimated $700 billion each year - equivalent to about 30% of an average company's revenue[1]. For small businesses like HVAC services, landscaping companies, or janitorial firms, these costs can be especially burdensome.

Automation helps by taking over tedious data-sifting tasks, allowing businesses to redirect their resources. Automated solutions can handle over 80% of the manual monitoring and checks[1], freeing up staff to focus on productive, billable work instead of administrative duties. Large-scale automation projects have shown they can cut data validation costs by 50%[1], making compliance more manageable and affordable for businesses that often struggle with these administrative demands.

Better Compliance and Decision-Making

The benefits of automated validation go beyond speed and cost savings - it also strengthens governance and compliance. Accurate data ensures businesses remain compliant, avoiding penalties and simplifying regulatory reviews. With a logged audit trail, organizations can streamline compliance certification processes[17][19]. For instance, in May 2019, the USDA Food and Nutrition Service partnered with the GSA to implement an API-based validation service for Form FNS-742. Program Analyst Whitney Peters highlighted:

"We are piggybacking on the process they created, and we can add many more checks than they currently employ without adding burden"[18].

This system allowed school districts to receive instant error notifications during data entry without requiring changes to their existing software.

Validated data also builds trust, which is critical for decision-making. Currently, only 27% of data practitioners fully trust the data they work with[7]. Automated validation creates a "single source of truth" across systems like payroll, inventory, and taxes[7][19]. With reliable data at their fingertips, business owners can make informed decisions instead of relying on uncertain figures.

Conclusion

Automated data validation takes the headache out of filings, turning what used to be a tedious, error-prone process into one that’s efficient and dependable. For local service businesses - whether you’re running an HVAC company, managing a landscaping crew, or overseeing a janitorial service - automation removes the need for painstaking manual error checks that can drag out the process for days or even weeks[3]. Instead, you get instant feedback during data entry, catching mistakes before they snowball into bigger problems.

Beyond just addressing common challenges, automation delivers immediate, tangible benefits. It’s not just about speeding things up - it’s about creating a reliable, real-time system that ensures compliance[19]. When your data is accurate and synchronized across all platforms, you can make decisions with confidence, backed by solid information rather than guesswork. As Jim Hliboki from 8020 Consulting explains:

"In today's complex regulatory landscape, maintaining compliance while ensuring efficiency isn't just a goal; it's a necessity for survival"[19].

The impact of automation is felt across industries. For small businesses operating on tight budgets, this means cutting costs and spending more time on revenue-generating activities instead of drowning in administrative work.

Timely and precise filings are essential, especially as government regulations become more intricate and change more often. Automation equips your business to handle growing filing demands without the need to hire extra administrative staff, allowing you to scale without creating bottlenecks[19]. On top of that, automation shifts reporting from a backward-looking task to a proactive, ongoing process where compliance policies enforce themselves in real time[20].

Start by automating your most critical data and controls, and then expand as you see the benefits[20]. Tools like Cohesive AI make it easy for local service businesses to adopt these validation techniques, enabling faster and more compliant filings. Investing in automation today not only safeguards your business against future compliance risks but also frees up your team to focus on what truly matters - delivering exceptional service to your customers.

FAQs

How does automated data validation enhance accuracy and compliance in government filings?

Automated data validation checks that every detail in a government filing meets strict formatting and compliance standards, like those outlined by the SEC. By automatically reviewing dates, numeric entries, and identifiers, the system identifies potential errors before submission. This proactive approach minimizes the chances of non-compliance and reduces the expense and hassle of resubmissions. It’s a safeguard against human mistakes and inconsistencies, enhancing overall accuracy.

On top of that, automated validation speeds up the filing process by delivering instant feedback on technical requirements, such as XBRL formatting and necessary contexts. This efficient method enables organizations to meet regulatory demands without sacrificing precision. By standardizing data, it not only ensures accurate filings but also strengthens ongoing compliance monitoring across U.S. government systems.

What types of errors can AI catch that manual reviews might overlook?

AI is highly effective in catching formatting inconsistencies, duplicate entries, and mismatched fields that might slip through during a manual review. It can also flag missing data, values that fall outside acceptable ranges, and schema validation errors, ensuring all data complies with established standards.

On top of that, AI shines in detecting subtle numeric or date discrepancies and other intricate errors that human reviewers might overlook. This not only boosts the accuracy of government filings but also streamlines the entire process.

What are the benefits of automated data validation for small businesses?

Automated data validation plays a crucial role in helping small businesses ensure their government filings are accurate and error-free. It works by automatically verifying that fields such as dates (formatted as MM/DD/YYYY), dollar amounts (e.g., $0.00), and ZIP codes (5 digits) follow the required formats. This process catches typos, missing details, and inconsistencies before forms are submitted, sparing businesses from costly re-filing fees, compliance headaches, and wasted time.
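For the three formats named above, a small-business form check might look like this sketch. The field names (`date`, `fee`, `zip`) are hypothetical, and the dollar pattern assumes a leading `$`, optional thousands separators, and exactly two decimal places.

```python
import re
from datetime import datetime

US_DATE_SHAPE = re.compile(r"\d{2}/\d{2}/\d{4}")
DOLLARS = re.compile(r"\$\d{1,3}(,\d{3})*\.\d{2}|\$\d+\.\d{2}")
ZIP5 = re.compile(r"\d{5}")


def is_us_date(text: str) -> bool:
    """True for a real calendar date written as MM/DD/YYYY."""
    if not US_DATE_SHAPE.fullmatch(text):
        return False
    try:
        datetime.strptime(text, "%m/%d/%Y")
    except ValueError:
        return False
    return True


def form_errors(form: dict[str, str]) -> list[str]:
    """Return the names of fields that fail their format check."""
    checks = {
        "date": is_us_date,
        "fee": lambda v: DOLLARS.fullmatch(v) is not None,
        "zip": lambda v: ZIP5.fullmatch(v) is not None,
    }
    return [name for name, ok in checks.items() if not ok(form.get(name, ""))]


form = {"date": "01/15/2026", "fee": "$125.5", "zip": "7870"}
print(form_errors(form))  # ['fee', 'zip']
```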

Beyond speeding up processes, automated validation improves the overall quality of data. It ensures forms are complete, standardized, and free of duplicates. These tools can even integrate with existing systems, like accounting software, to reconcile fields and highlight mismatches. For local service businesses - such as janitorial, landscaping, HVAC, and catering - platforms like Cohesive AI leverage this technology to verify business information, tailor outreach efforts, and streamline lead generation. The result? A smoother, more confident path to growth.